A KG-GNN-LLM Framework for Latent Space Context Synthesis

A plug-and-play framework for improving the factual groundedness of LLMs. Experiments were run on a 3070 GPU under tight memory and compute constraints. We showed that GNNs can be trained to generate knowledge-graph-derived context. We hope that scaling up the model will allow for more complex reasoning.

Analyzing the Effect of Fourier Features in MRI Classification Models

We can already get >99% classification accuracy with state-of-the-art convolutional models. We wanted to see what happens during training when we add Fourier features to the input data, so we created this visualization.

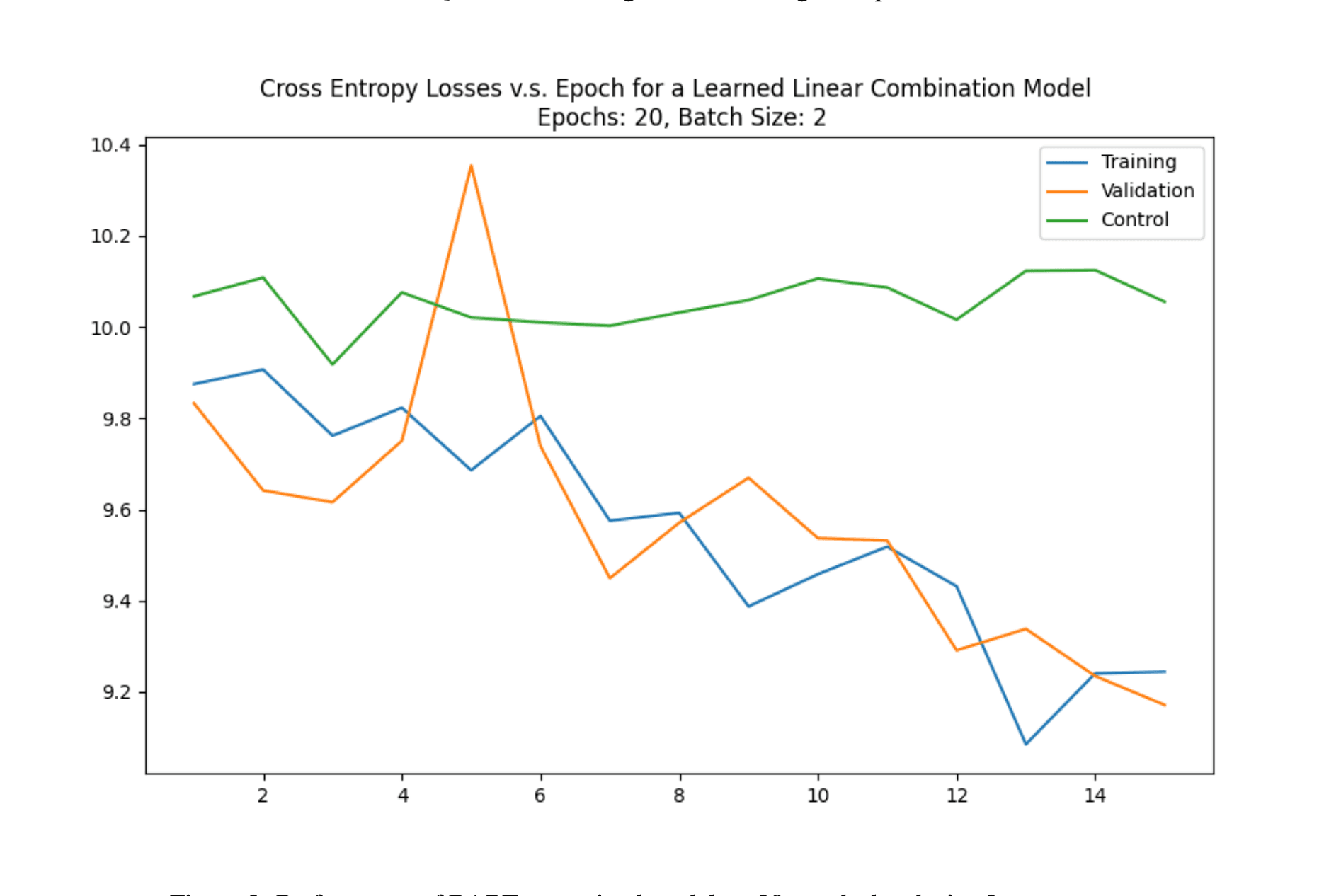
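To make "adding Fourier features to the input data" concrete, here is a minimal sketch of one possible reading: the log-magnitude of the image's 2D FFT is stacked onto the image as an extra channel. This is an assumption for illustration, not necessarily the feature construction used in the project, and the function name `add_fourier_channel` is hypothetical.

```python
import numpy as np

def add_fourier_channel(image):
    """Stack a log-magnitude spectrum onto a grayscale image.

    image: (H, W) float array.
    Returns a (2, H, W) array: channel 0 is the original image,
    channel 1 is log(1 + |FFT|) with the zero frequency shifted to the center.
    Assumption: this is only one way to define "Fourier features".
    """
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    log_magnitude = np.log1p(np.abs(spectrum))
    return np.stack([image, log_magnitude], axis=0)

# Toy input standing in for an MRI slice (hypothetical data).
toy_slice = np.ones((4, 4))
augmented = add_fourier_channel(toy_slice)
print(augmented.shape)
```

The extra channel can then be fed to a convolutional model by changing its number of input channels from 1 to 2.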

Continuous Prompt Generation from Linear Combination of Discrete Prompt Embeddings

We trained a neural model to linearly combine sentence embeddings into a latent prefix that improves BART's performance on a sequence-to-sequence task. I think reducing problems like this to linear algebra is a promising direction for interpretability.
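The core idea can be sketched in a few lines of NumPy. Everything here is a hypothetical stand-in: the sizes, the random "discrete prompt" embeddings, and the single linear map `W` that predicts mixing weights are assumptions for illustration, not the trained model itself.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 4 discrete prompts, 8-dim embeddings, 2 prefix vectors.
num_prompts, dim, prefix_len = 4, 8, 2

# One fixed embedding per discrete prompt (random stand-ins here).
prompt_embeddings = rng.normal(size=(num_prompts, dim))

def make_prefix(input_embedding, W):
    """Build prefix vectors as linear combinations of prompt embeddings.

    W is a hypothetical weight predictor of shape (prefix_len * num_prompts, dim);
    in practice a trained neural model would play this role.
    """
    weights = (W @ input_embedding).reshape(prefix_len, num_prompts)
    # Each prefix vector is a weighted sum of the discrete prompt embeddings.
    return weights @ prompt_embeddings  # shape: (prefix_len, dim)

W = rng.normal(size=(prefix_len * num_prompts, dim))
input_embedding = rng.normal(size=dim)
prefix = make_prefix(input_embedding, W)
print(prefix.shape)
```

Because every prefix vector lies in the span of the discrete prompt embeddings, the mixing weights can be read directly as how much each discrete prompt contributes, which is what makes this setup attractive for interpretability.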